Introduction

Thanks to rapid cost declines and increasingly performant foundation models, artificial intelligence (AI) is transforming how developers write software and how software will drive productivity. Increasingly, developers are turning to AI-based solutions to accelerate the software development life cycle, including ideation, implementation, testing, deployment, and maintenance.

Large language models have solved basic coding problems for some time. OpenAI’s Codex, the GPT-3-based model behind GitHub Copilot, predated the launch of ChatGPT by a full year. More recent advances in reasoning and context length have turbocharged the use of AI in software development. Reasoning has advanced the ability of a model to plan and iterate its thinking while solving problems, and advances in context length have increased working memory while completing tasks.

According to our research, as software development costs continue to fall, the demand for AI-driven capabilities that deliver much more business impact than prior generations of software should explode. As this software evolves, the best positioned layers of the tech stack will be platform-as-a-service and infrastructure software that use AI and data to build, operate, and secure applications.

As Costs Plummet, Intelligence Is Exploding

While some tech pundits suggest that foundation model performance has plateaued, our research shows that recent breakthroughs have advanced overall capability. Reasoning gives models the ability to think iteratively before responding, while extended context length allows them to reason with much larger volumes of data at inference time. The integration of tool use, which connects models to third-party systems via application programming interfaces (APIs), allows models to complete tasks from beginning to end and has transformed AI systems from “chatbots” to “AI agents.”

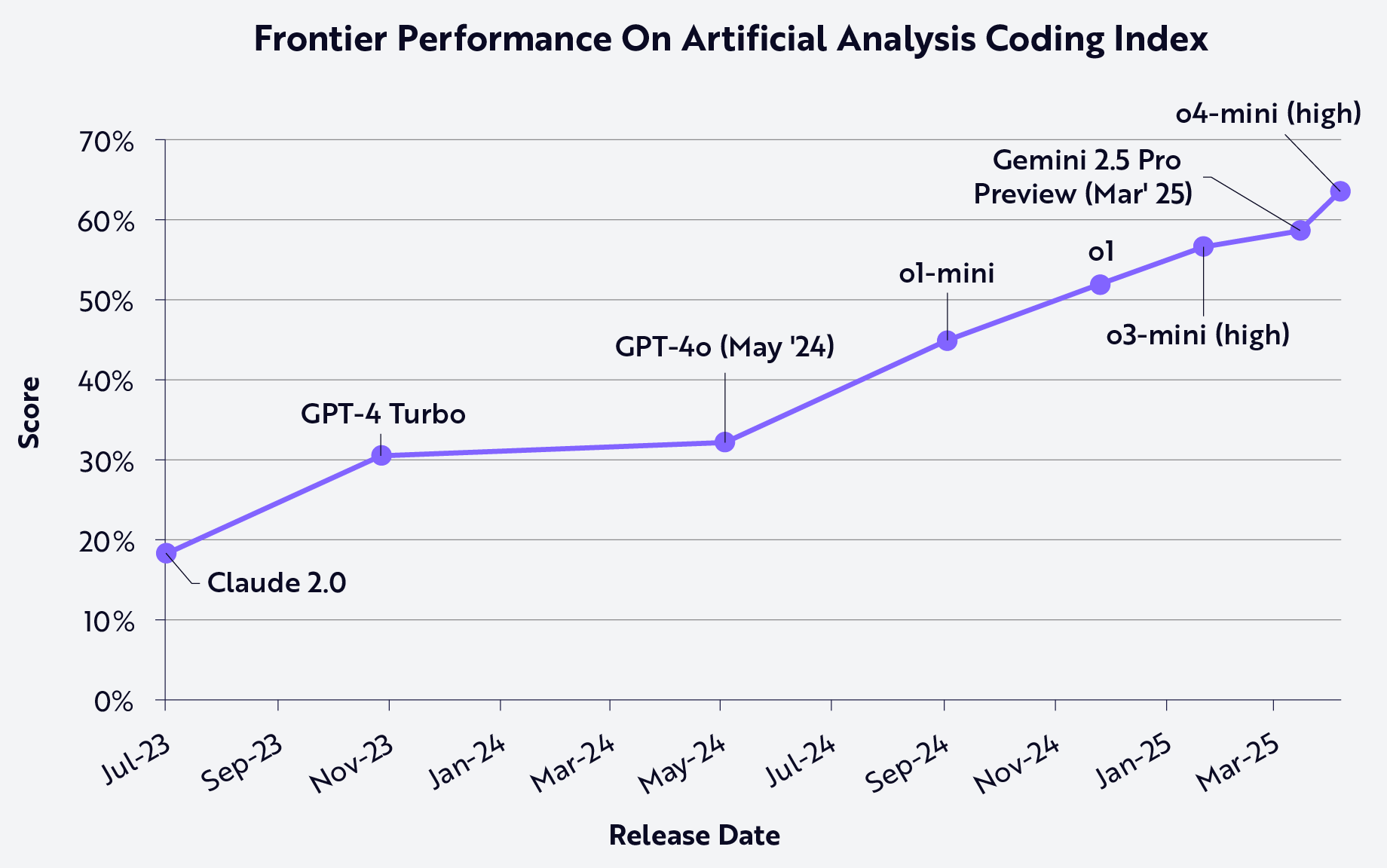

AI coding performance has improved consistently over the last two years, as shown below:

Note: Based on the Artificial Analysis Coding Index, which averages model performance across multiple coding benchmarks. Source: ARK Investment Management LLC, 2025, based on data from Artificial Analysis as of June 30, 2025. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

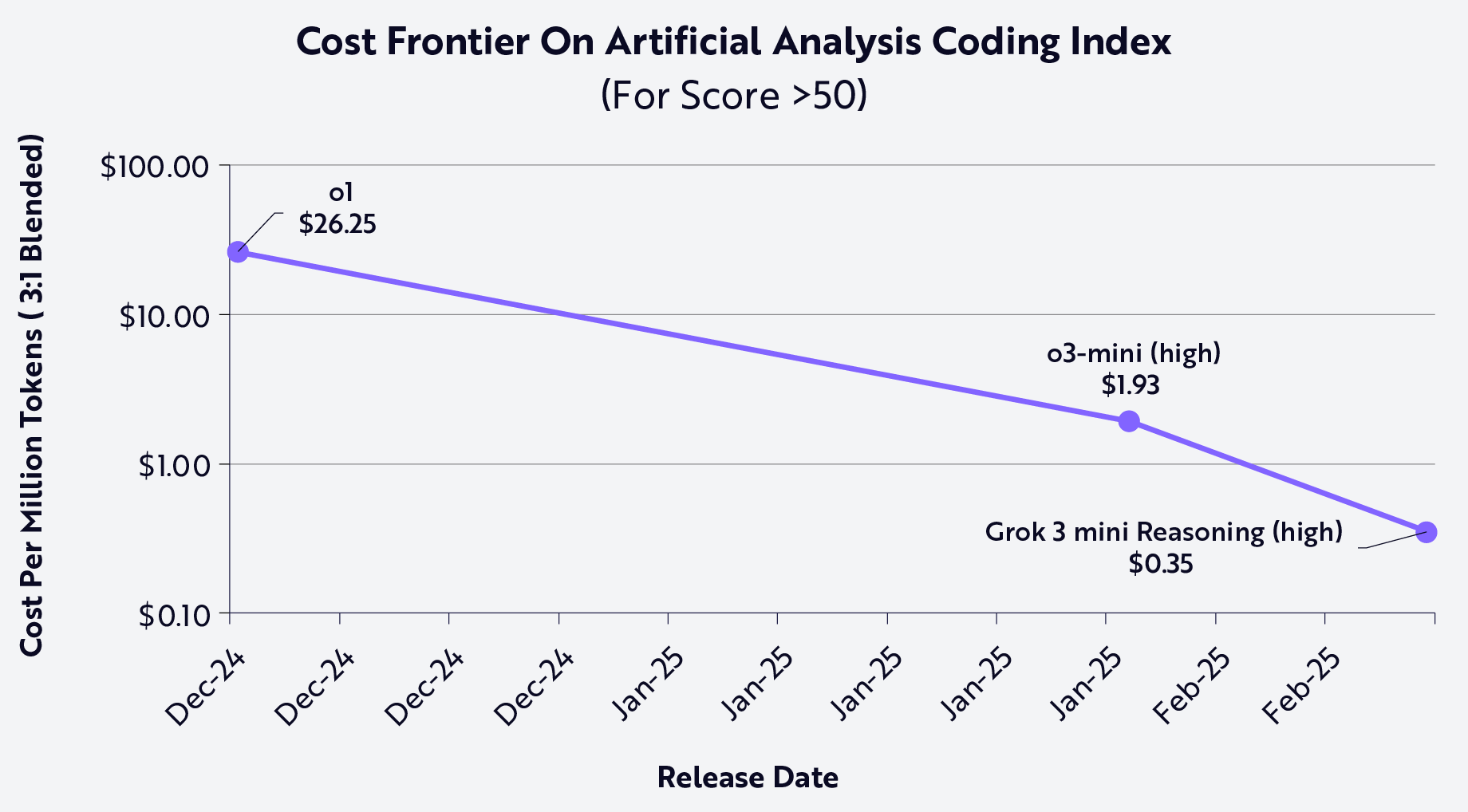

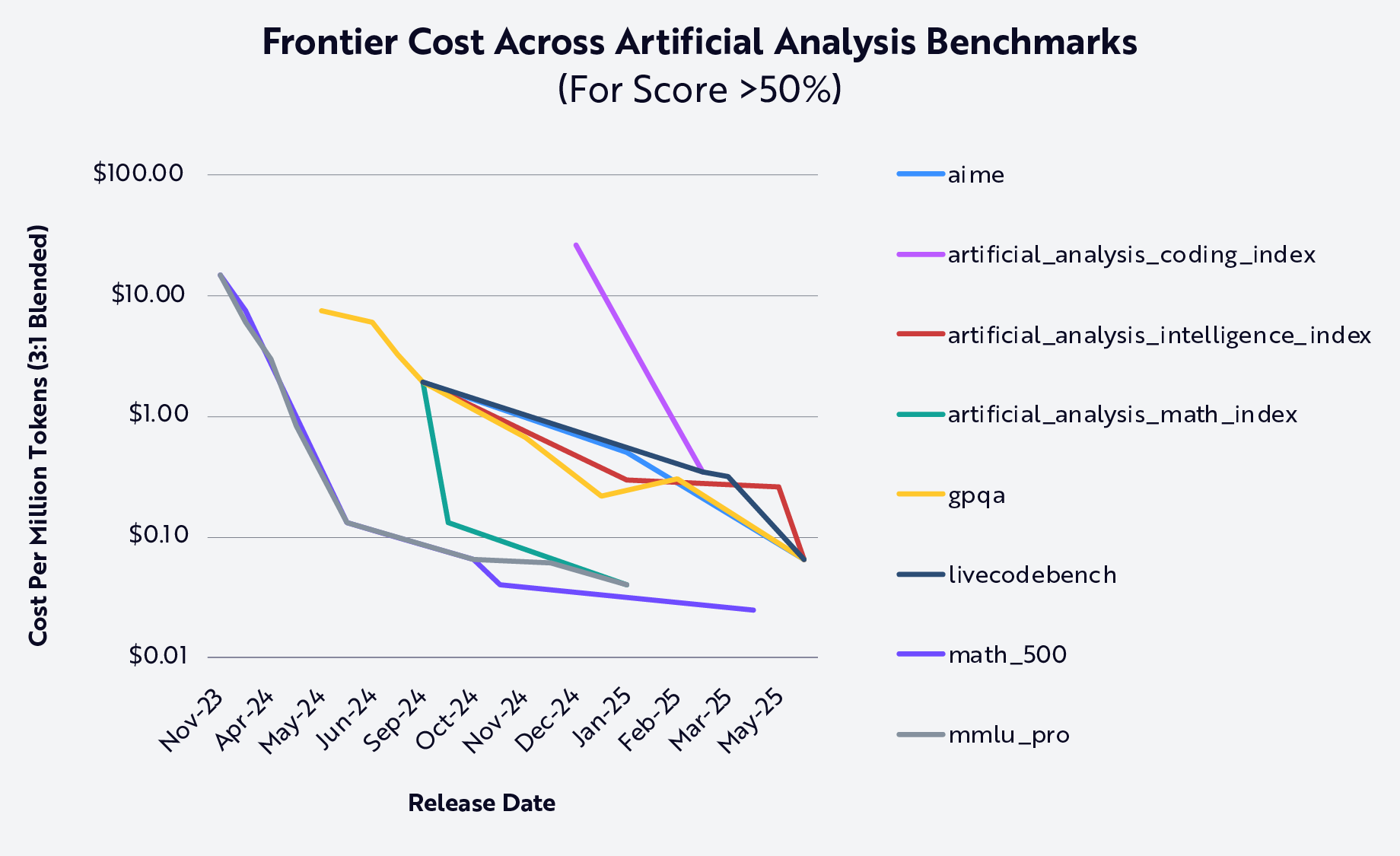

Meanwhile, the cost to use models has plummeted. In the last six months, for example, the minimum cost to use a model that scores at least 50% on Artificial Analysis’s Coding Index has fallen 98.7%, a 75-fold drop from $26.25 per million tokens to just $0.35, as shown below. xAI’s Grok 3 mini reasoning model is now the lowest-cost offering that meets or exceeds that threshold.

Source: ARK Investment Management LLC, 2025, based on data from Artificial Analysis as of June 30, 2025. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.
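The relationship between the 98.7% decline and the 75-fold drop cited above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `cost_decline` is hypothetical, and the two prices are the per-million-token figures quoted in the text.

```python
def cost_decline(old_price: float, new_price: float) -> tuple[float, float]:
    """Return (fold_reduction, percent_decline) between two positive prices."""
    if old_price <= 0 or new_price <= 0:
        raise ValueError("prices must be positive")
    fold_reduction = old_price / new_price              # how many times cheaper
    percent_decline = (1 - new_price / old_price) * 100  # percentage drop
    return fold_reduction, percent_decline


# Prices per million tokens as quoted in the text.
fold, pct = cost_decline(26.25, 0.35)
print(f"{fold:.0f}x cheaper, a {pct:.1f}% decline")
```

The two figures describe the same change: a 75-fold reduction leaves 1/75 of the original price, which is a decline of roughly 98.7%.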

With history as a guide, AI systems should continue to improve in both benchmark and real-world performance as costs decline. Indeed, data from Our World in Data demonstrates that, over the past 25+ years, AI systems have exceeded human-level performance across many domains, and that the advances have accelerated in the last five to ten years, as shown below.


Source: Kiela et al. 2023/Our World in Data 2024.1 For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

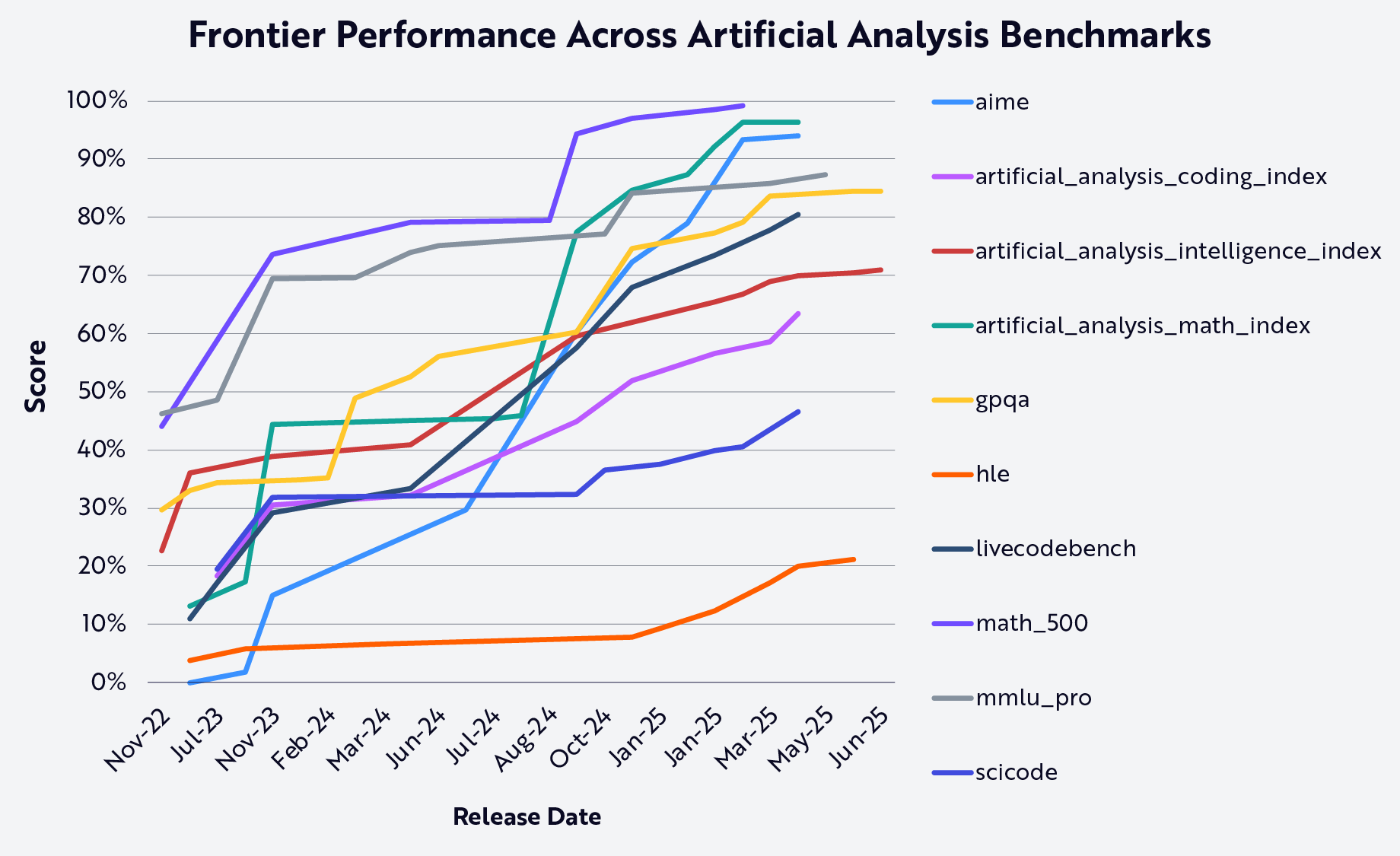

Large language models show the same pattern of performance rising relative to cost, as shown in the two charts below.

Note: The precise meaning of each of the measures is provided by Artificial Analysis 2025.2 Definitions may be accessed at: https://artificialanalysis.ai/methodology/intelligence-benchmarking. Source: ARK Investment Management LLC, 2025, based on data from Artificial Analysis as of June 30, 2025. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

Note: The precise meaning of each of the measures is provided by Artificial Analysis 2025.3 Definitions may be accessed at: https://artificialanalysis.ai/methodology/intelligence-benchmarking. Source: ARK Investment Management LLC, 2025, based on data from Artificial Analysis as of June 30, 2025. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

In other words, as costs plummet, AI capability continues to increase across disciplines.

Industry Implications

While no benchmark translates perfectly to real-world performance, our analysis suggests a correlation. As predicted by Jevons paradox4 and our research on Wright’s Law,5 demand for tokens is surging as costs are falling. Microsoft recently announced that, during the first quarter, it processed over 100 trillion tokens, a five-fold increase year-over-year, while Google’s production of tokens soared 50-fold during the past year.6 Microsoft and Google might not account for tokens in the same way, but their inflection points are consistent.

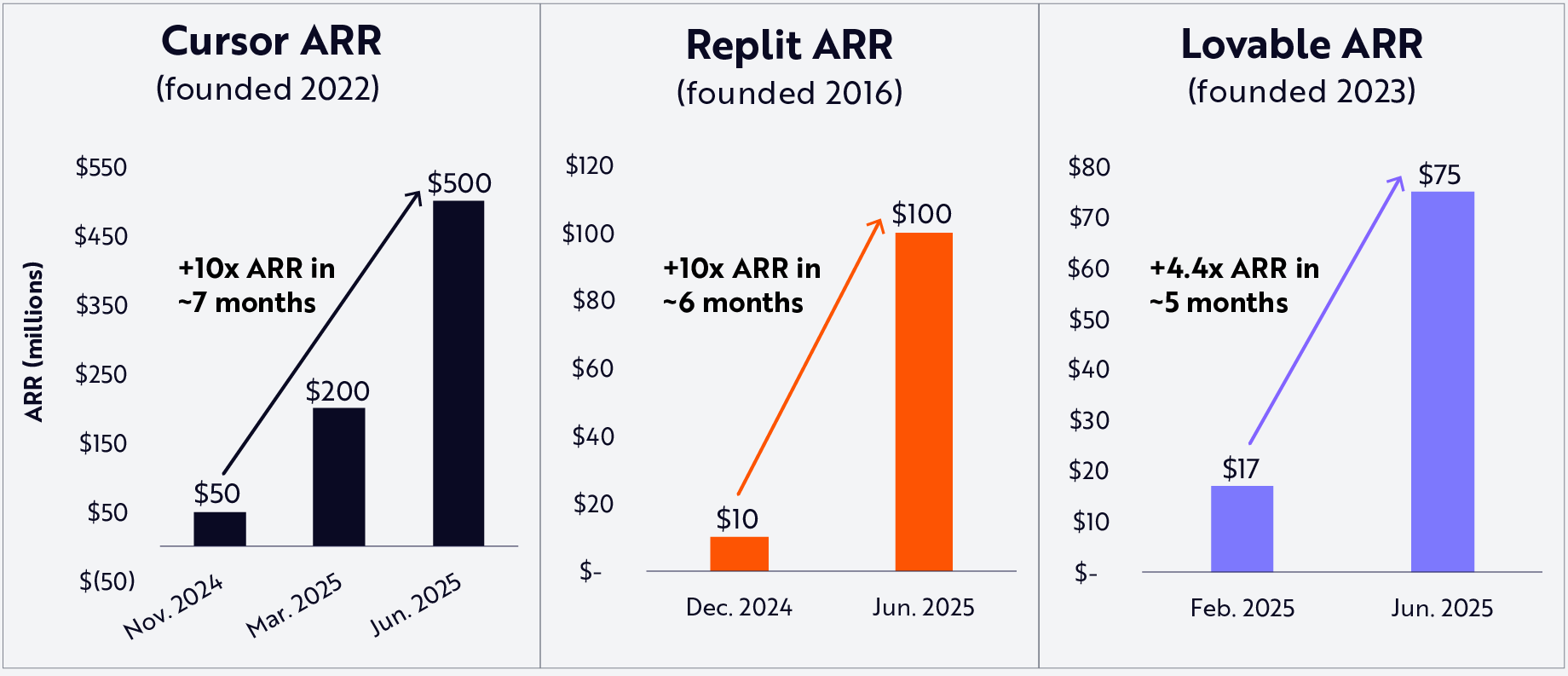

AI-native integrated development environments (IDEs) are some of the fastest growing token users. With product suites that integrate AI-assistance deeply into the software development lifecycle, companies like Cursor and Replit are experiencing breakout growth.

Two flywheels appear to be reinforcing each other and driving the rapid adoption of AI IDEs. First, as the capabilities of models increase and their features expand, the products built on top of them improve instantly. Observing that AI IDEs are increasing the consumption of tokens, foundation model companies like OpenAI and Anthropic are incentivized to improve their models. Second, as AI IDEs improve, they attract more users. As their user bases grow, AI IDEs enhance proprietary data to build and fine-tune models for their platforms. Together, these dynamics improve product quality and create differentiated moats relative to competitors building on the same foundation models, a phenomenon recently articulated by Cursor’s Co-Founder and CEO, Michael Truell.7

Indeed, both Cursor and Replit increased run-rate revenue 10x between the fourth quarter of 2024 and the first half of 2025, scaling from $50 million to $500 million in annual run-rate (ARR) revenue and from $10 million to $100 million in ARR, respectively, as shown below. Founded in late 2023, their peer Lovable quadrupled its run-rate revenue to $75 million between February and June 2025.

Source: ARK Investment Management LLC, 2025. This ARK analysis draws on a range of external data sources as of June 30, 2025, which may be provided upon request. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

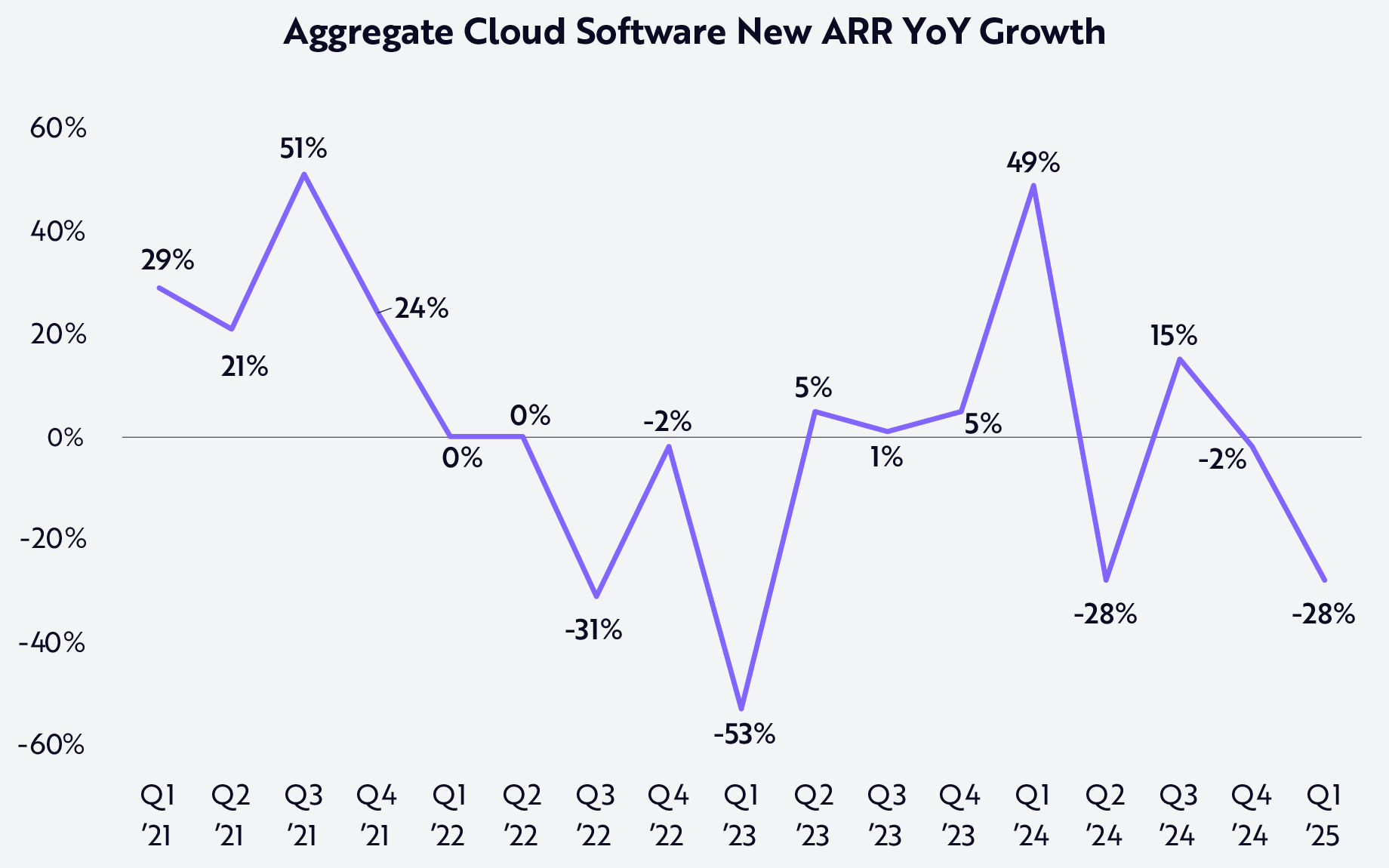

While remarkable in absolute terms, these growth rates are more impressive in the face of a 28% year-over-year contraction in net new ARR for public cloud software companies during the first quarter of 2025.

Source: ARK Investment Management LLC, 2025, based on data from Ball 2025 as of June 30, 2025.8 For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results.

In other words, public cloud software companies seem to be ceding share to their counterparts in private markets.

Implications For Software, The Cloud, And Knowledge Work

According to OpenAI’s CEO Sam Altman, current foundation models are the “stupidest” they ever will be.9 In our view, this comment applies both to the core models and to the products built around them. Given the democratization of core model intelligence, the product layer is becoming increasingly important.

As enterprises devote limited engineering resources to AI tools, lowering the cost of creating software, productivity should increase. Meanwhile, new classes of “AI-native” companies will put AI to work in new and interesting ways. Their products and internal operations should support more revenue with fewer employees than was possible previously. With fewer than 200 employees, for example, Cursor's valuation is ~$10 billion, suggesting more than $50 million in market value per employee.10 Traditional software moats could be at risk now that copying the feature set of competitors is easy. The ability to ship quickly to solve customer needs is essential, and the opportunity for winners could be massive.

Should these trends continue, software could be at the threshold of a Cambrian explosion. Just as the cloud lowered the marginal cost of operating software and created SaaS (Software-as-a-Service), generative AI could collapse the barriers to building software further. The buy-vs-build decision is likely to shift as AI makes possible at scale that which would not have made economic sense in traditional software development.

Importantly, the meanings of “developers” and “software” are evolving. Now that the average businessperson can instruct AI agents with natural language, generative AI is expanding the number of people empowered to create software beyond developers with specialized computer coding skills.

Indirectly, the TAM (total available market) for software writers could expand as business users delegate tasks to AI agents, possibly without realizing that the agents are writing code behind the scenes to help them. Even today, OpenAI’s ChatGPT uses a Python interpreter to write code and help users complete advanced data analysis tasks, and Anthropic’s Claude creates shareable apps and tools backed by code. In these cases, the “software” tools are more like consulting services: users request the completion of a task, and AI agents leverage an array of knowledge, tools, and code to complete it.

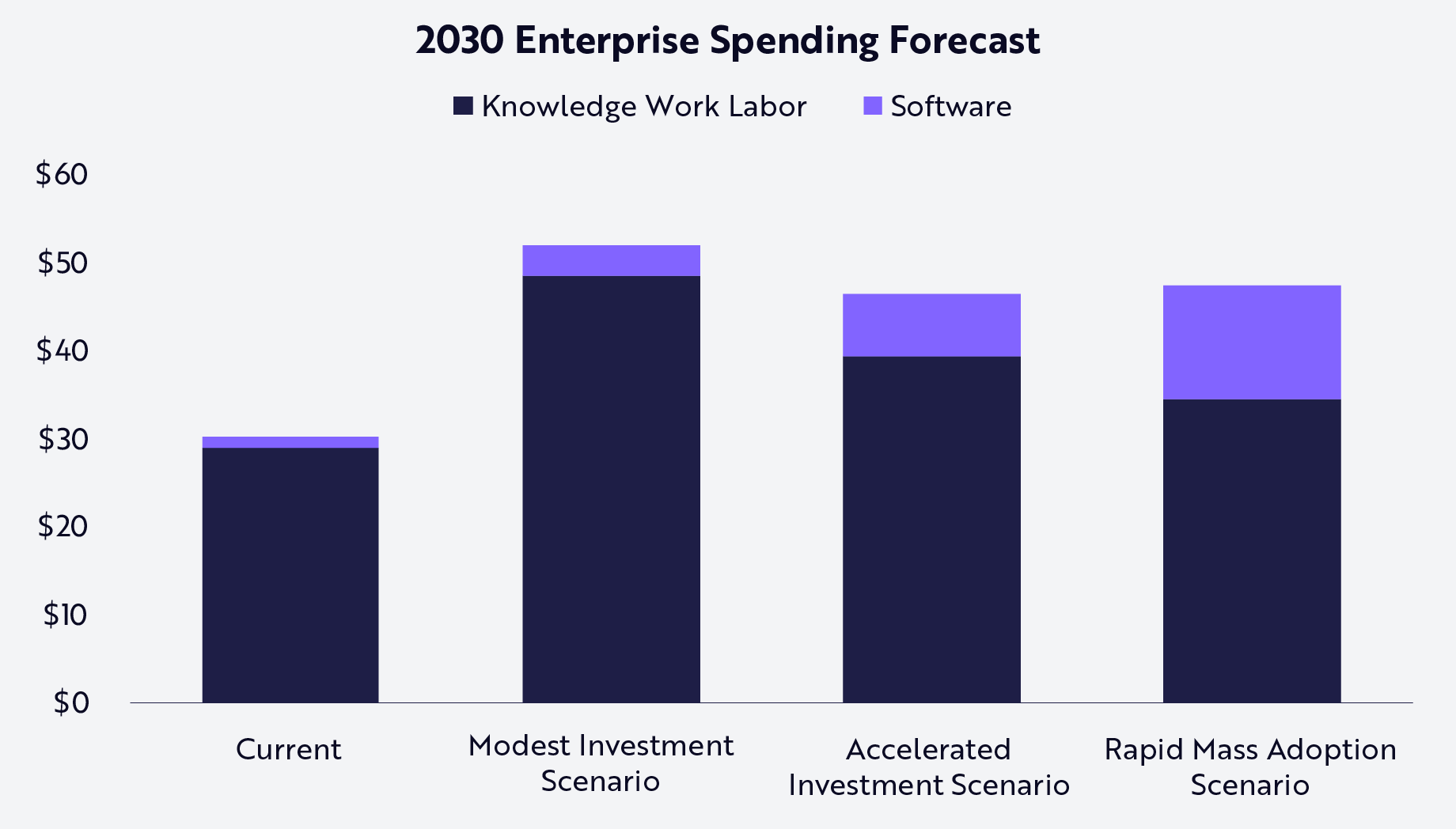

Today, global spend on software and cloud services is roughly $1.25 trillion.11 We believe AI-driven advances in performance and cost will accelerate software spending relative to global knowledge worker wages, which we estimate were ~$30 trillion in 2024.12 Growth in software spend could accelerate from 14% in 2025 to an annual rate of 18-48% through 2030. At the midpoint of that range, annual spending on software could grow 33% per year, from $1.25 trillion today to $7 trillion by 2030, as shown below.

Forecasted Adoption Scenarios

| | Modest Investment | Accelerated Investment | Rapid Mass Adoption |
| --- | --- | --- | --- |
| Annual knowledge worker employment growth | 6.3% | 3.2% | 1.3% |
| Percentage of current working time automated by 2030 | 31% | 61% | 81% |
| Reduction in productive working hours | 0% | 8% | 20% |
| Productivity surplus created* | $22 trillion | $57 trillion | $117 trillion |
| Value capture of productivity solutions** | 10% | 10% | 10% |
| New software revenue | $2.2 trillion | $5.7 trillion | $11.7 trillion |
| 2030 Software Market Estimate (current size + AI revenue) | $3.5 trillion (18% CAGR) | $7 trillion (33% CAGR) | $13 trillion (48% CAGR) |

Note: “CAGR”: Compound Annual Growth Rate. *Traditional production statistics are unlikely to adequately capture the surplus created by AI software. **Value capture rates are likely to vary across scenarios based on competition in the market and other factors. The rate is held constant in these scenarios for simplicity. Source: ARK Investment Management LLC, 2025. This ARK analysis draws on a range of external data sources as of December 31, 2024, which may be provided upon request. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Past performance is not indicative of future results. Forecasts are inherently limited and cannot be relied upon.
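The arithmetic linking the rows of the scenario table can be sketched as follows. This is an illustrative reconstruction, not ARK’s model: the function name `project` is hypothetical, and the six-year compounding horizon (a 2024 base year through 2030) is an assumption chosen because it is consistent with the table’s rounded growth rates. The surplus figures, the 10% value capture rate, and the $1.25 trillion current market size come from the text.

```python
CURRENT_MARKET_TRILLIONS = 1.25  # current software and cloud spend, from the text
VALUE_CAPTURE = 0.10             # share of surplus captured, held constant in the table
YEARS = 6                        # ASSUMPTION: 2024 base year compounding through 2030

# Productivity surplus by scenario, in trillions of dollars, from the table.
SURPLUS_TRILLIONS = {
    "Modest Investment": 22.0,
    "Accelerated Investment": 57.0,
    "Rapid Mass Adoption": 117.0,
}


def project(surplus, current=CURRENT_MARKET_TRILLIONS,
            capture=VALUE_CAPTURE, years=YEARS):
    """Return (new_revenue, market_2030, cagr) for one scenario."""
    new_revenue = surplus * capture        # revenue captured from the surplus
    market_2030 = current + new_revenue    # current size + AI revenue
    cagr = (market_2030 / current) ** (1 / years) - 1
    return new_revenue, market_2030, cagr


for name, surplus in SURPLUS_TRILLIONS.items():
    new_rev, market, cagr = project(surplus)
    print(f"{name}: new revenue ${new_rev:.1f}T, "
          f"2030 market ${market:.2f}T, CAGR {cagr:.0%}")
```

Under these assumptions, the computed market sizes and growth rates round to the figures in the table’s last row. A different base year or horizon would produce different growth rates.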

Notably, we expect AI-driven productivity to offset forward growth in wage spend, varying based on adoption scenarios, but do not foresee a decline in the employed population. This expectation is contrary to claims of a “white-collar bloodbath” that wipes out large portions of the labor force.13 Our point of view is informed by examples of technology-driven productivity gains, such as the advent of mechanized farming: over the 20th century, from 1900 to 2000, the percentage of the US labor force employed by farms dropped from 40% to 1.9%. Over roughly the same period, farm output doubled and the US labor force grew nearly six-fold, from 24 million in 1900 to 139 million in 1999.14 Perhaps “this time will be different,” as the rate of change is seemingly so much faster. In our view, while we are near historical lows in unemployment, the burden of proof is on the labor pessimists. We believe a 20% reduction in annual human working hours, as shown in the “Rapid Mass Adoption” scenario above, is more likely than a 20% reduction in the labor force. A reduction of working hours in the face of productivity gains is, again, consistent with trends over the last 150 years.15
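The farm employment figures above imply headcounts that can be worked out directly. The sketch below is a rough illustration using only the numbers quoted in the text. Applying the 2000 farm share to the 1999 labor force is an approximation made here for simplicity, and the helper name `split_labor_force` is hypothetical.

```python
# Figures quoted in the text.
LABOR_FORCE_1900 = 24_000_000
LABOR_FORCE_1999 = 139_000_000
FARM_SHARE_1900 = 0.40
FARM_SHARE_2000 = 0.019  # ASSUMPTION: applied to the 1999 labor force as an approximation


def split_labor_force(labor_force, farm_share):
    """Return (farm_workers, non_farm_workers) implied by a farm employment share."""
    farm = labor_force * farm_share
    return farm, labor_force - farm


farm_start, other_start = split_labor_force(LABOR_FORCE_1900, FARM_SHARE_1900)
farm_end, other_end = split_labor_force(LABOR_FORCE_1999, FARM_SHARE_2000)
growth = LABOR_FORCE_1999 / LABOR_FORCE_1900

print(f"Labor force growth: {growth:.1f}x")
print(f"Implied farm workers: {farm_start / 1e6:.1f}M -> {farm_end / 1e6:.1f}M")
print(f"Implied non-farm workers: {other_start / 1e6:.1f}M -> {other_end / 1e6:.1f}M")
```

On these inputs, implied farm employment shrinks even as the total labor force grows nearly six-fold, so non-farm employment absorbs both the displaced workers and all of the net growth.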

Source: ARK Investment Management LLC, 2025. This ARK analysis draws on a range of external data sources as of June 30, 2025, which may be provided upon request. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Forecasts are inherently limited and cannot be relied upon.

In 2030, the software market could look much different than it does today in both predictable and unpredictable ways. Software in the AI age is likely to be more transformative than the transition from on-prem software with perpetual licenses to cloud-based subscription software. Most of the uncertainty revolves around application software: many incumbents will leverage AI to fortify their installed bases and data moats, but fast-moving AI-natives that obviate the need for legacy vendors could be highly disruptive.

Two areas of the market that should benefit disproportionately from this transition, fueled by proprietary data, are platform-as-a-service and infrastructure software solutions that are used to build, operate, and secure applications. Today, Palantir and Databricks are the leaders in this space. In addition, we believe new workloads should create significant demand for infrastructure, potentially benefiting the major cloud players—AWS, Azure, GCP, and Oracle—and a new breed of neo-cloud competitors like CoreWeave.

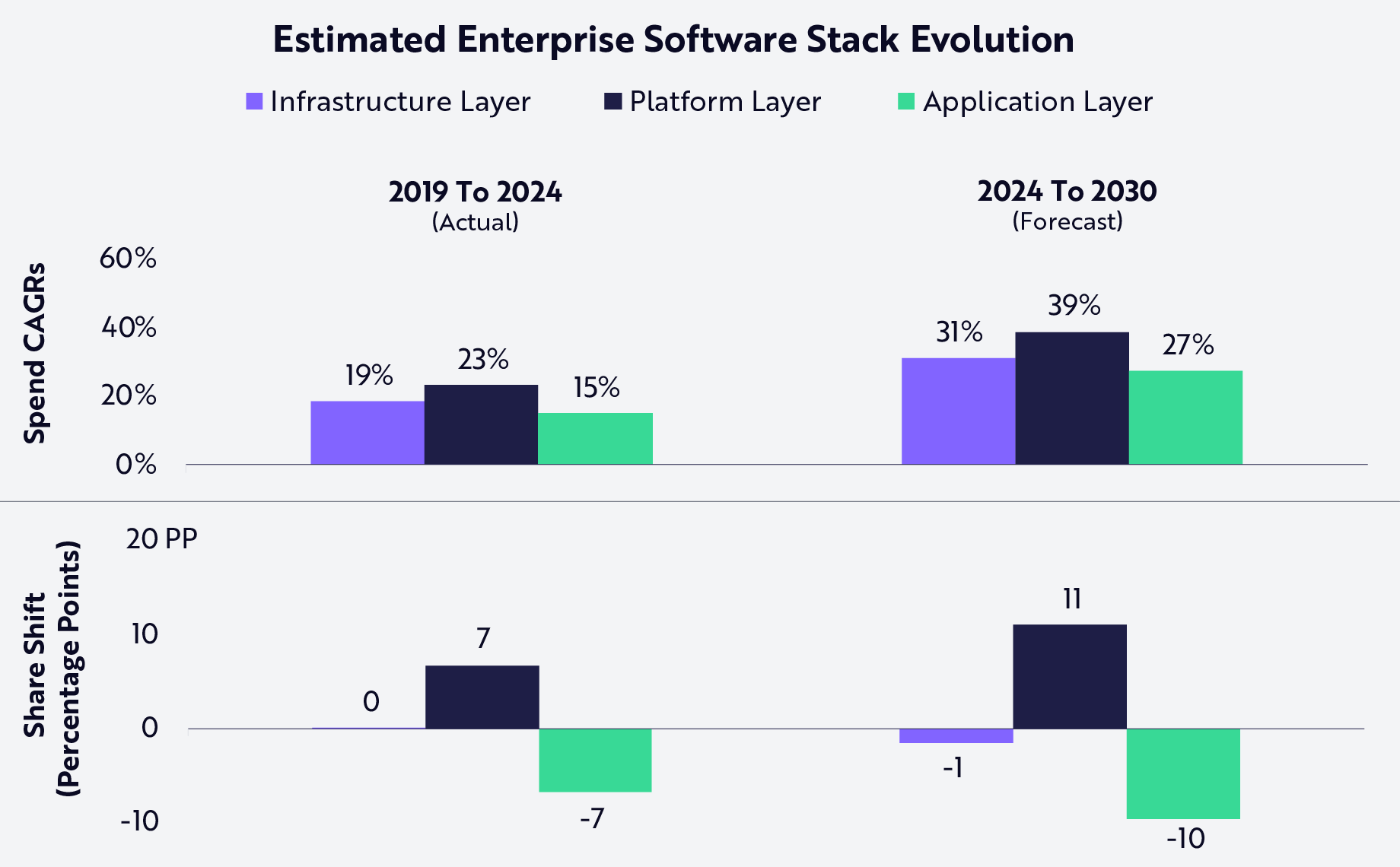

Already, during the past five years, revenue at the platform layer increased 22% at an annual rate, compared to 19% and 15% for the infrastructure and application layers, respectively. At the midpoint of our forecast for the next five years, those share gains should accelerate, the platform layer gaining 11 percentage points, to the detriment of application layer companies, as shown below.

Source: ARK Investment Management LLC, 2025. This ARK analysis draws on a range of external data sources as of June 30, 2025, which may be provided upon request. For informational purposes only and should not be considered investment advice or a recommendation to buy, sell, or hold any particular security. Forecasts are inherently limited and cannot be relied upon.

While likely to lose more share during the next five years, application layer software is unlikely to disappear and, in certain cases, could thrive. In fact, successful AI-native software applications like ChatGPT and Replit are emerging as new category leaders, as discussed above. That said, we believe a large incumbent base of application software—dominated by traditional enterprise resource planning (ERP) and customer relationship management (CRM) deployments and the largest portion of public cloud spending today16—will have to re-invent to capitalize on the AI age or face declining revenue growth in the face of AI-native competition.